The Apple Vision Pro generated a lot of buzz across the tech world and continues to draw attention. A new app lets users control a robot with head and hand movements tracked by the mixed reality headset.

Controlling a Robot

Scientists developed an app that allows a user to control a robot with their head and hands. The app, called “Tracking Streamer,” tracks human movements and streams the data over a WiFi connection to a robot on the same network. It specifically tracks the movements of the head, wrists, and fingers, and the robot then translates that data into similar movements of its own. The researchers published their findings on GitHub. Their system tracks 26 points on the hand and wrists, separate points on the head, and even how high off the ground a user is.
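To make the streaming idea concrete, here is a minimal Python sketch of how one frame of tracking data might be packaged for sending over a network and unpacked on the robot side. It is not the researchers' code or the app's actual data format. The names `TrackingFrame`, `encode_frame`, and `decode_frame`, the JSON encoding, and the reading of the 26 points as a per-hand count are all assumptions made for illustration.

```python
import json
from dataclasses import dataclass, asdict
from typing import List, Tuple

# A 3D position in meters (assumed units).
Point = Tuple[float, float, float]

# Figure quoted in the article; treating it as a per-hand count is an assumption.
NUM_HAND_POINTS = 26


@dataclass
class TrackingFrame:
    """Hypothetical container for one snapshot of headset tracking data."""
    head: Point               # head position
    left_hand: List[Point]    # tracked points on the left hand and wrist
    right_hand: List[Point]   # tracked points on the right hand and wrist
    height_m: float           # how high off the ground the user is


def encode_frame(frame: TrackingFrame) -> bytes:
    """Serialize a frame to bytes so it can be sent over a socket."""
    return json.dumps(asdict(frame)).encode("utf-8")


def decode_frame(payload: bytes) -> TrackingFrame:
    """Rebuild a frame on the receiving (robot) side."""
    raw = json.loads(payload.decode("utf-8"))
    return TrackingFrame(
        head=tuple(raw["head"]),
        left_hand=[tuple(p) for p in raw["left_hand"]],
        right_hand=[tuple(p) for p in raw["right_hand"]],
        height_m=raw["height_m"],
    )


if __name__ == "__main__":
    # Build a dummy frame with every hand point at the origin.
    hand = [(0.0, 0.0, 0.0)] * NUM_HAND_POINTS
    frame = TrackingFrame(head=(0.0, 1.6, 0.0), left_hand=hand,
                          right_hand=list(hand), height_m=1.6)
    payload = encode_frame(frame)
    print(f"{len(payload)} bytes per frame")
    print(decode_frame(payload) == frame)
```

In a real setup, the bytes from `encode_frame` would be written to a network socket on the headset side and read on the robot side. A robot controller would then map the decoded points onto its own joints, a step this sketch leaves out.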

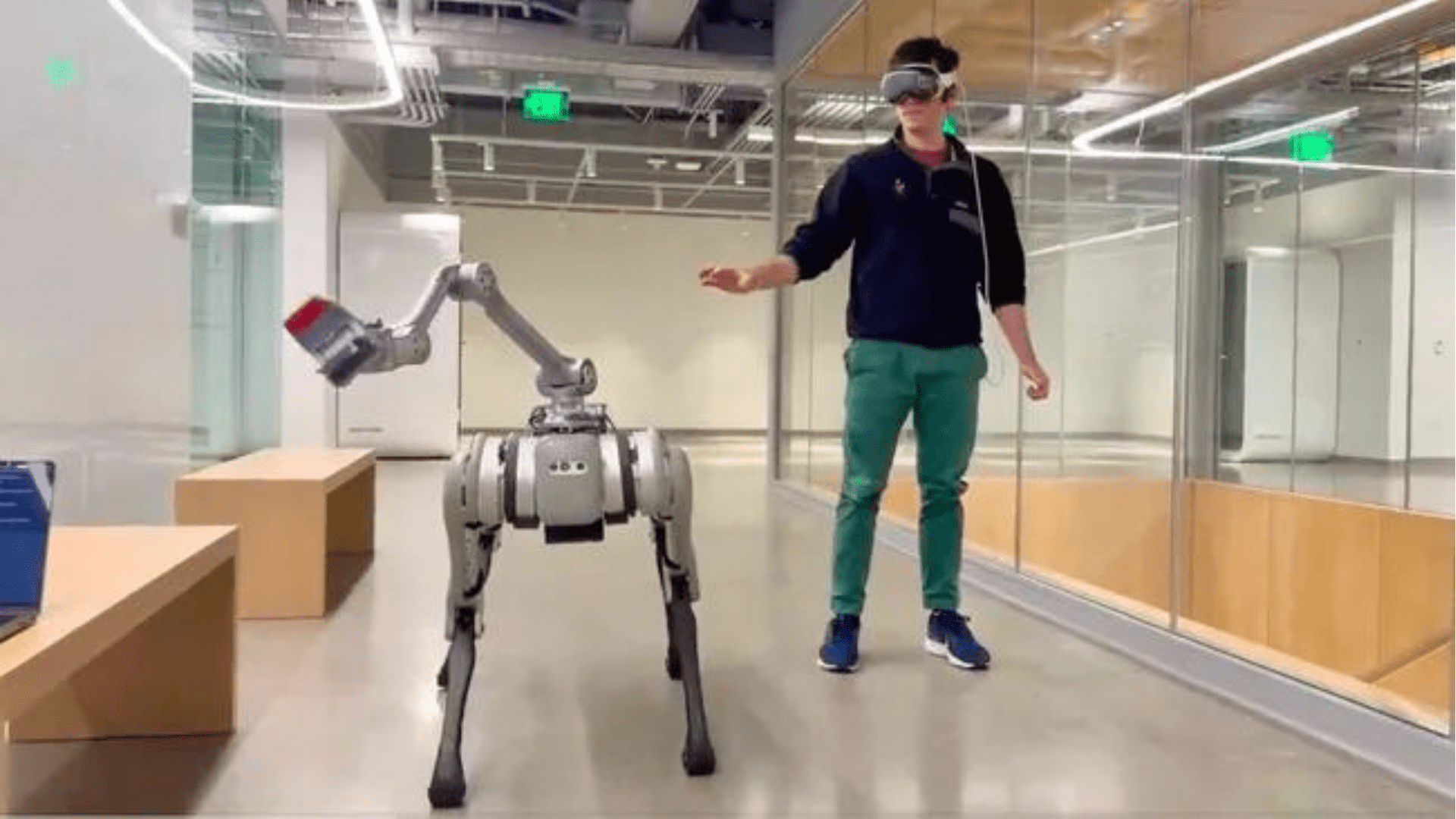

The app’s developer, Younghyo Park, is a doctoral student at MIT who published a video of the app in action on X, formerly known as Twitter. In the video, Gabe Margolis, a grad student at MIT and co-author of the study, demonstrates how the app works. You can see him controlling a four-legged robot using his hands and body movements.

During the demonstration, Margolis commands the robot to open a closed door with its gripper and walk through it. He also directs the robot to pick up a piece of trash and throw it into the garbage. At one point in the video, the robot mimics his movements and bends down when Margolis does.

Restrictions and Limitations

The Apple Vision Pro comes with a lot of upsides, but it also has limitations. Because the device relies on tracking movement, it is restricted in tight spaces like elevators and in moving vehicles. In addition, users must be aware of their hand positioning to ensure accurate tracking. For example, tracking is limited when a user’s hands are by their side or on their waist.

However, the researchers believe there is a lot of potential in combining the Apple Vision Pro and robotics. In the paper, Park and Margolis say the continued use of the Apple Vision Pro provides more data that can be fed into teaching robots how to move. They say there are plans to add more features for robotic applications.