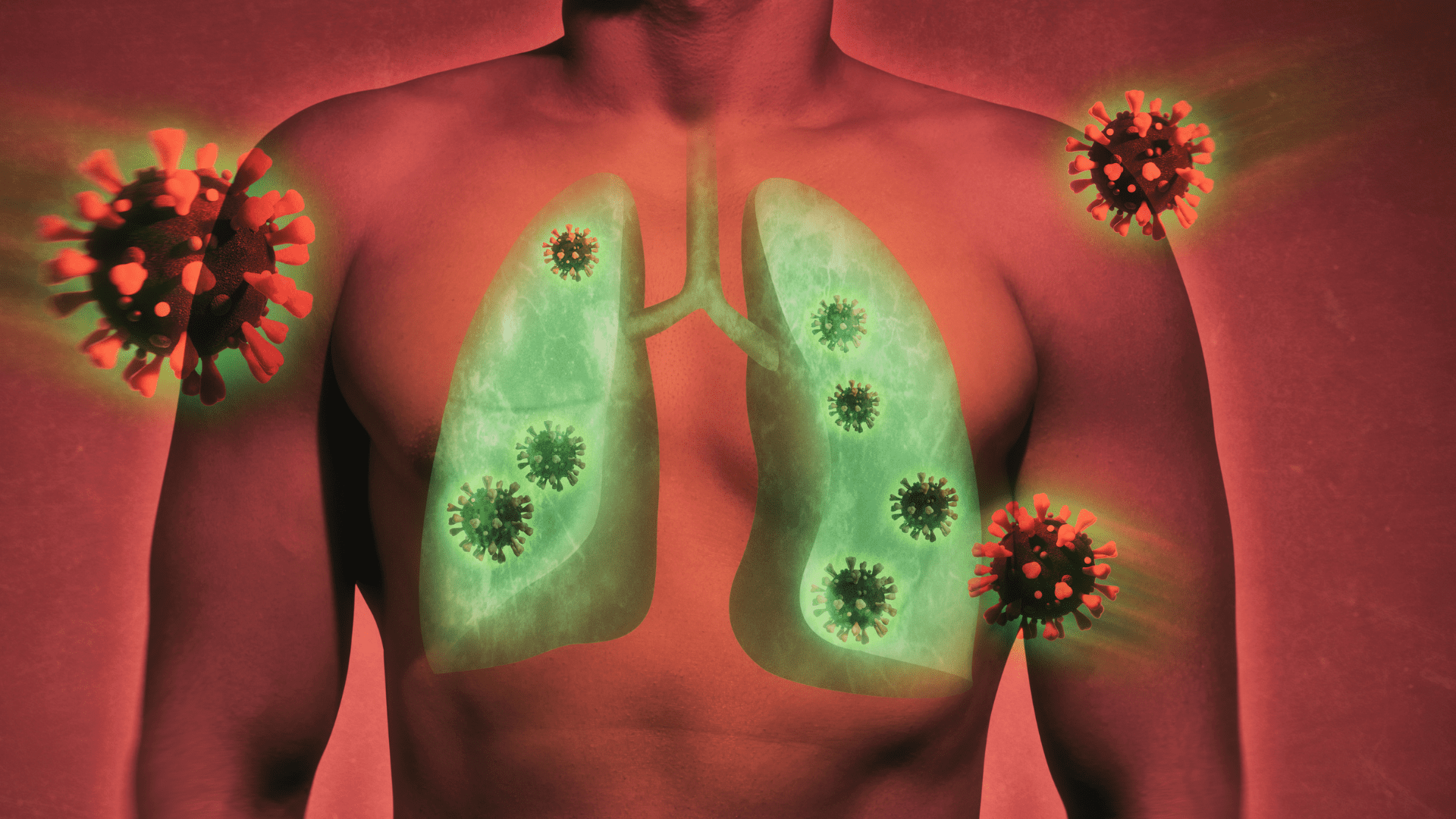

New research shows that artificial intelligence can spot COVID-19 in a patient’s lungs, much as facial recognition software picks out a face. According to researchers at Johns Hopkins University, AI can detect signs of the disease in lung ultrasound images.

The discovery brings medical professionals closer to using algorithms to quickly diagnose COVID-19 and other pulmonary diseases. These algorithms sift through large numbers of ultrasound images to spot signs of disease.

Ongoing Efforts

Researchers began their study in the early days of the pandemic, when clinicians needed tools to relieve overwhelmed emergency rooms. “We developed this automated detection tool to help doctors in emergency settings with high caseloads of patients who need to be diagnosed quickly and accurately,” said Muyinatu Bell, the study’s senior author.

Bell, who is also an associate professor at Johns Hopkins University, said using artificial intelligence to detect diseases such as COVID-19 holds promise. “Potentially, we want to have wireless devices that patients can use at home to monitor progression of COVID-19, too,” she said. Researchers also see potential beyond COVID-19.

The same AI tools could power wearables that track conditions such as congestive heart failure, which can cause fluid to build up in a patient’s lungs, according to co-author Tiffany Fong, an assistant professor at Johns Hopkins University. “What we are doing here with AI tools is the next big frontier for point of care,” Fong said. “An ideal use case would be wearable ultrasound patches that monitor fluid buildup and let patients know when they need a medication adjustment or when they need to see a doctor.”


AI Analyzing Images

Researchers combined computer-generated images with real ultrasound images from patients, and the AI analyzes them to spot features known as B-lines. These features appear as bright, vertical abnormalities that indicate inflammation in patients with pulmonary complications.

“We had to model the physics of ultrasound and acoustic wave propagation well enough in order to get believable simulated images,” Bell said. “Then we had to take it a step further to train our computer models to use these simulated data to reliably interpret real scans from patients with affected lungs.”
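The article does not show the researchers’ model, which is a deep neural network. To make “bright, vertical” concrete, here is a hand-written toy heuristic instead, not the study’s method. It assumes a grayscale image stored as a list of rows of 0-255 values and a known row index for the pleural line, and it flags runs of columns that stay bright from that line to the bottom of the image. The function name, thresholds, and input format are all hypothetical.

```python
def find_bline_columns(image, pleural_row, brightness=200, min_fraction=0.8):
    """Toy B-line finder (hypothetical heuristic, not the study's neural network).

    image: list of rows, each a list of 0-255 grayscale ints (assumed format).
    pleural_row: index of the row where the pleural line sits (assumed known).
    Returns (start_col, end_col) pairs for vertical bright streaks.
    """
    depth = len(image) - pleural_row
    if depth <= 0:
        return []
    width = len(image[0])

    # A column counts as bright if most of its pixels below the pleural line are bright.
    bright_cols = []
    for col in range(width):
        bright = sum(1 for row in range(pleural_row, len(image))
                     if image[row][col] >= brightness)
        bright_cols.append(bright / depth >= min_fraction)

    # Group neighboring bright columns into candidate vertical streaks.
    streaks = []
    start = None
    for col, is_bright in enumerate(bright_cols):
        if is_bright and start is None:
            start = col
        elif not is_bright and start is not None:
            streaks.append((start, col - 1))
            start = None
    if start is not None:
        streaks.append((start, width - 1))
    return streaks


# Synthetic 6x8 "scan": dark background with one bright vertical streak in columns 2-3.
dark, bright = 30, 230
image = [[dark] * 8 for _ in range(6)]
for row in range(1, 6):
    for col in (2, 3):
        image[row][col] = bright

print(find_bline_columns(image, pleural_row=1))  # [(2, 3)]
```

A fixed brightness threshold like this breaks down quickly on real scans, which vary in gain, depth, and noise; that variability is one reason learned models trained on many examples are attractive for this task.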

AI Then and Now

Researchers struggled to use AI in the early stages of the pandemic. Bell said they lacked enough data and were only beginning to understand how COVID-19 manifests inside a patient’s body. Bell and her team developed software that learns from a mixture of real and simulated data and then identifies abnormalities in ultrasound images to determine whether someone has COVID-19. The software is a type of AI loosely modeled on the interconnected neurons that allow the brain to recognize patterns and understand speech.

The study’s first author, Lingyi Zhao, said that because data were scarce early in the pandemic, “our deep neural networks never reached peak performance.” Zhao added, “Now, we are proving that with computer-generated datasets we still can achieve a high degree of accuracy in evaluating and detecting these COVID-19 features.”
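The article says only that the software learns from a mixture of real and simulated data; it does not say how the two were combined. As a minimal sketch under that gap, the hypothetical helper here keeps every real example and adds enough randomly chosen simulated ones to reach a target share. The `sim_fraction` parameter, the tagging scheme, and the helper itself are assumptions for illustration, not details from the study.

```python
import random


def mix_training_set(real, simulated, sim_fraction, seed=0):
    """Hypothetical helper: keep all real examples and add simulated ones so that
    roughly `sim_fraction` of the result is simulated. Each item is tagged by source."""
    if not 0.0 <= sim_fraction < 1.0:
        raise ValueError("sim_fraction must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_sim / (n_real + n_sim) = sim_fraction for n_sim,
    # capped by how many simulated examples are available.
    n_sim = round(len(real) * sim_fraction / (1.0 - sim_fraction))
    n_sim = min(n_sim, len(simulated))
    chosen = rng.sample(simulated, n_sim)
    mixed = [(x, "real") for x in real] + [(x, "simulated") for x in chosen]
    rng.shuffle(mixed)  # interleave sources so training batches see both
    return mixed


real_scans = [f"real_{i}" for i in range(10)]
simulated_scans = [f"sim_{i}" for i in range(100)]
training_set = mix_training_set(real_scans, simulated_scans, sim_fraction=0.5)
print(len(training_set))  # 20
```

Keeping every real scan and topping up with simulated ones is one simple choice when real data is scarce, as it was early in the pandemic; other balancing strategies are equally plausible.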