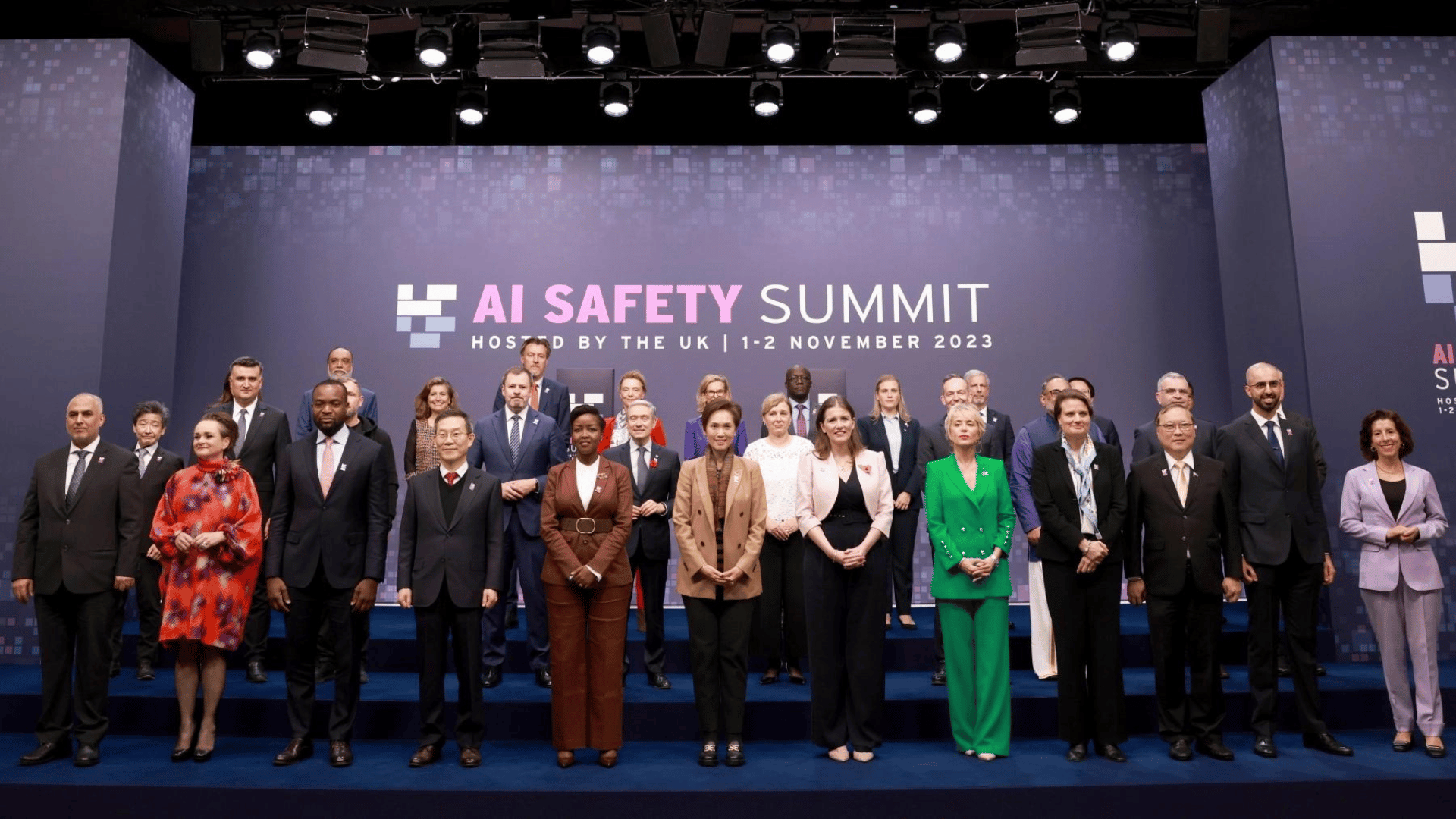

AI is rapidly changing the world, but who is setting the rules? On November 1 and 2, tech experts and global leaders from 27 countries and the European Union participated in the UK’s AI Safety Summit. The summit aimed to establish some ground rules and foster international collaboration on artificial intelligence. Here’s what you should know.

1. A First-of-Its-Kind Agreement Signed

On November 1, representatives of 28 countries, including the US and China, as well as the EU, signed the Bletchley Declaration on AI Safety, a first-of-its-kind agreement. The declaration aims to identify AI safety risks in areas such as cybersecurity, biotechnology, and misinformation, and it raises issues of transparency and accountability.

The declaration recognized that the risks of AI are best addressed through international cooperation. As the declaration stated, “We resolve to work together in an inclusive manner to ensure human-centric, trustworthy and responsible AI that is safe, and supports the good of all through existing international fora and other relevant initiatives, to promote cooperation to address the broad range of risks posed by AI.”

2. King Charles III Compares AI to Splitting Atom

Britain’s King Charles III addressed the summit in a video speech, comparing the significance of AI’s development to that of splitting the atom and harnessing fire. Describing AI as one of the greatest technological leaps in human history, Charles said that the technology could help cure medical conditions, quicken the journey to net zero, and unlock potentially limitless clean green energy.

However, he also warned of the significant risks that come with AI. He said, “There is a clear imperative to ensure that this rapidly evolving technology remains safe and secure… Because AI does not respect international boundaries, this mission demands international coordination and collaboration.”

3. Kamala Harris Highlights AI’s Threats

Representing the United States, Vice President Kamala Harris gave a speech that highlighted current concerns about AI, such as discrimination and misinformation. She stressed that the dangers of AI are already affecting marginalized groups and the information that democracies rely on.

Harris’s speech echoed the sentiments of the Executive Order that President Joe Biden issued last week. The Executive Order establishes new standards for AI safety in the United States by requiring tech firms to submit test results for powerful AI systems to the government before they are released to the public.

4. UK to Invest in Supercomputer

The United Kingdom announced it will invest £225 million ($277 million) in a new AI supercomputer called Isambard-AI, named after the 19th-century British engineer Isambard Kingdom Brunel. The University of Bristol will house the NVIDIA-powered machine, which is reportedly 10 times faster than the UK’s current fastest machine.

The computer is expected to come online in 2024 and will be able to help organizations with everything from automated drug discovery to robotics to national security. As Ian Buck, the Vice President of Hyperscale and HPC at NVIDIA, said in a statement, “Isambard-AI will provide researchers with the same state-of-the-art AI and HPC compute resources used by the world’s leading AI pioneers, enabling the UK to introduce the next wave of AI and scientific breakthroughs.”

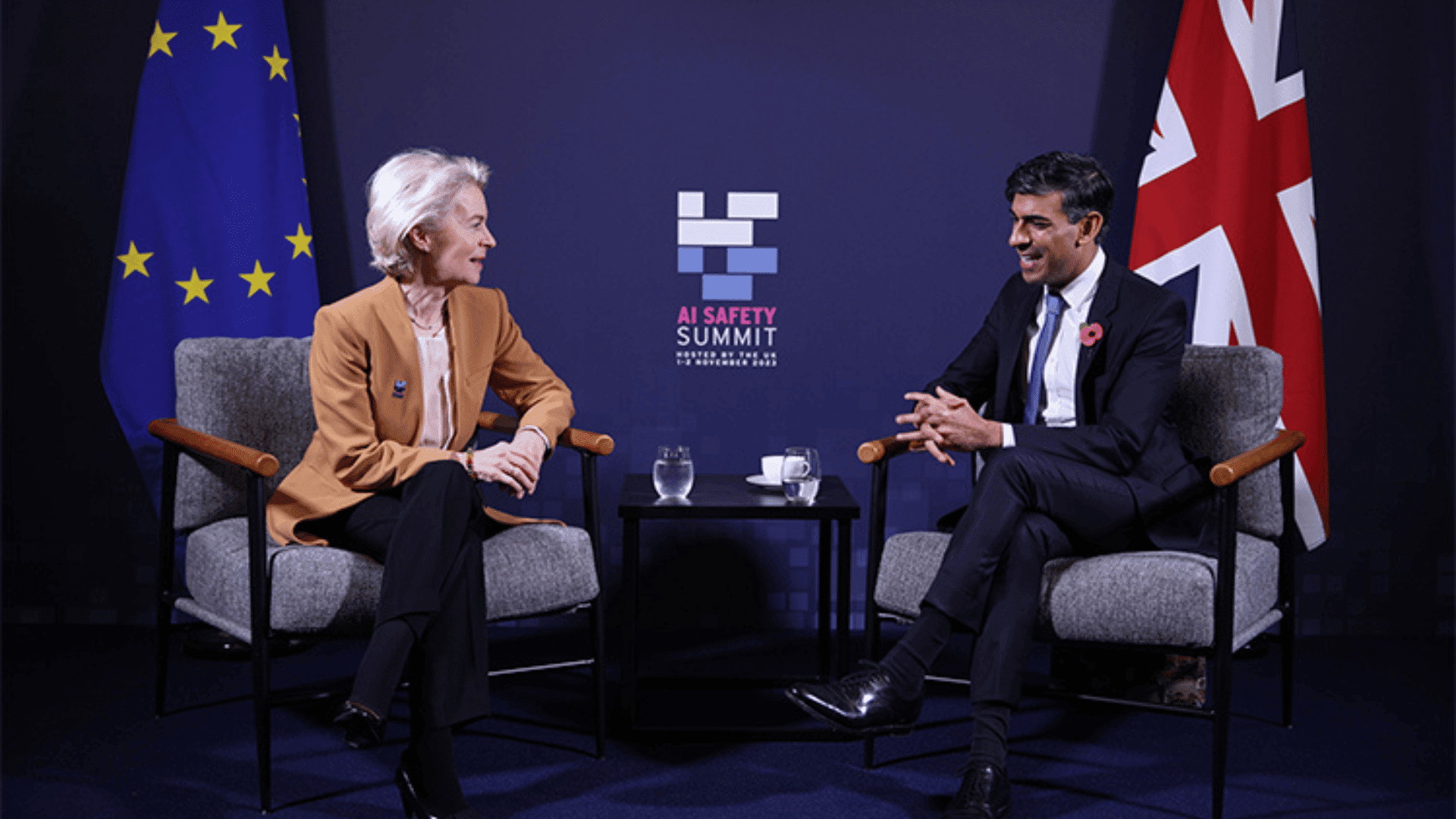

5. EU Working on AI Legislation

In a speech, European Commission President Ursula von der Leyen called for a system of scientific checks and balances with universally accepted safety standards. She noted that quantum physics led to the triumphs of nuclear energy but also to the horrors of the atomic bomb.

Von der Leyen also noted that the EU’s AI Act is in the final stages of the legislative process. She described the potential of founding a European AI Office that would “deal with the most advanced AI models, with responsibility for oversight” and work with the scientific community around the world.