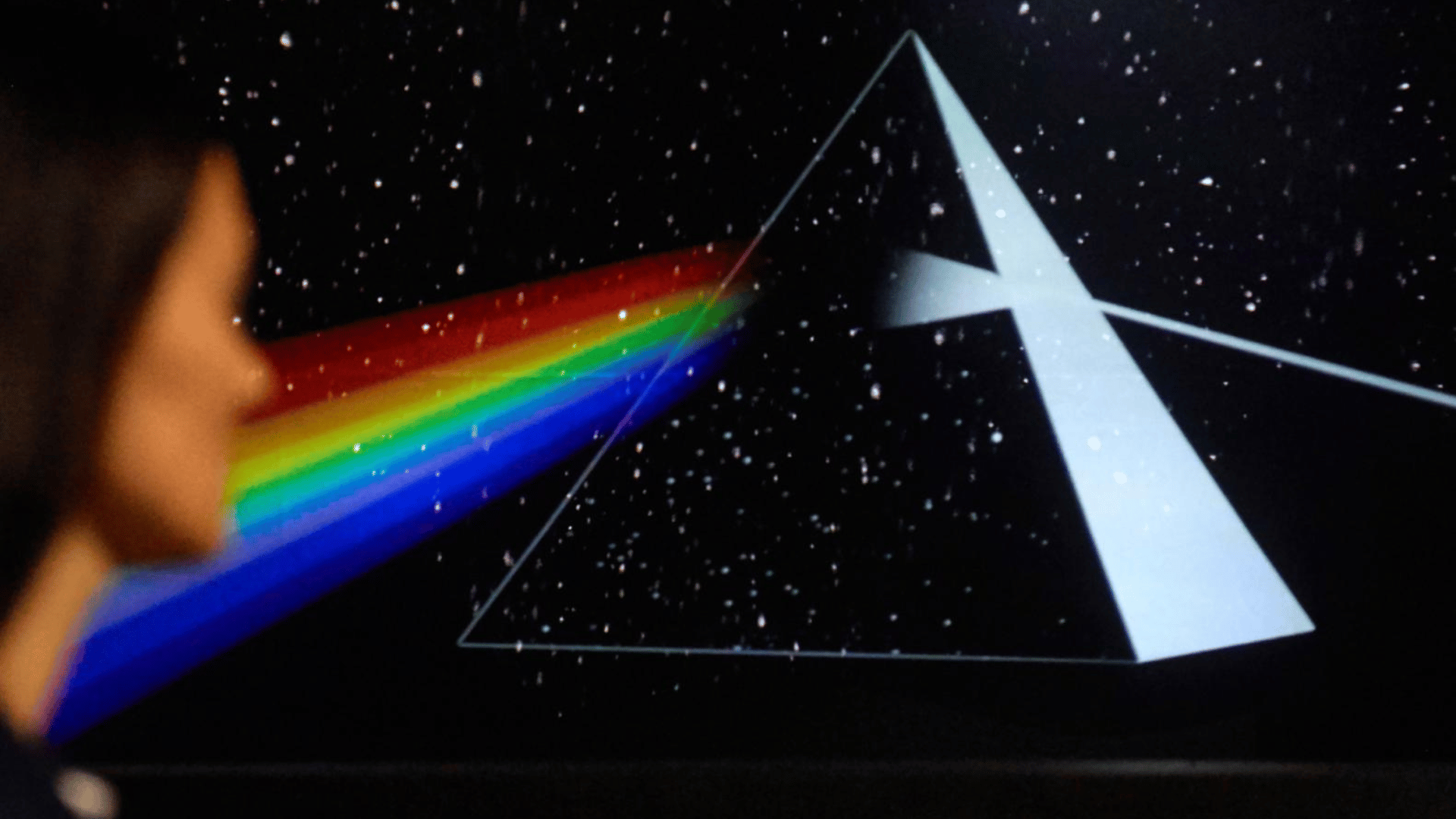

A recent research study used artificial intelligence to recreate Pink Floyd’s “Another Brick in the Wall, Part 1” from participants’ brain activity. The study marks the first time a recognizable song has been reconstructed from direct brain recordings.

The Study & Its Implications

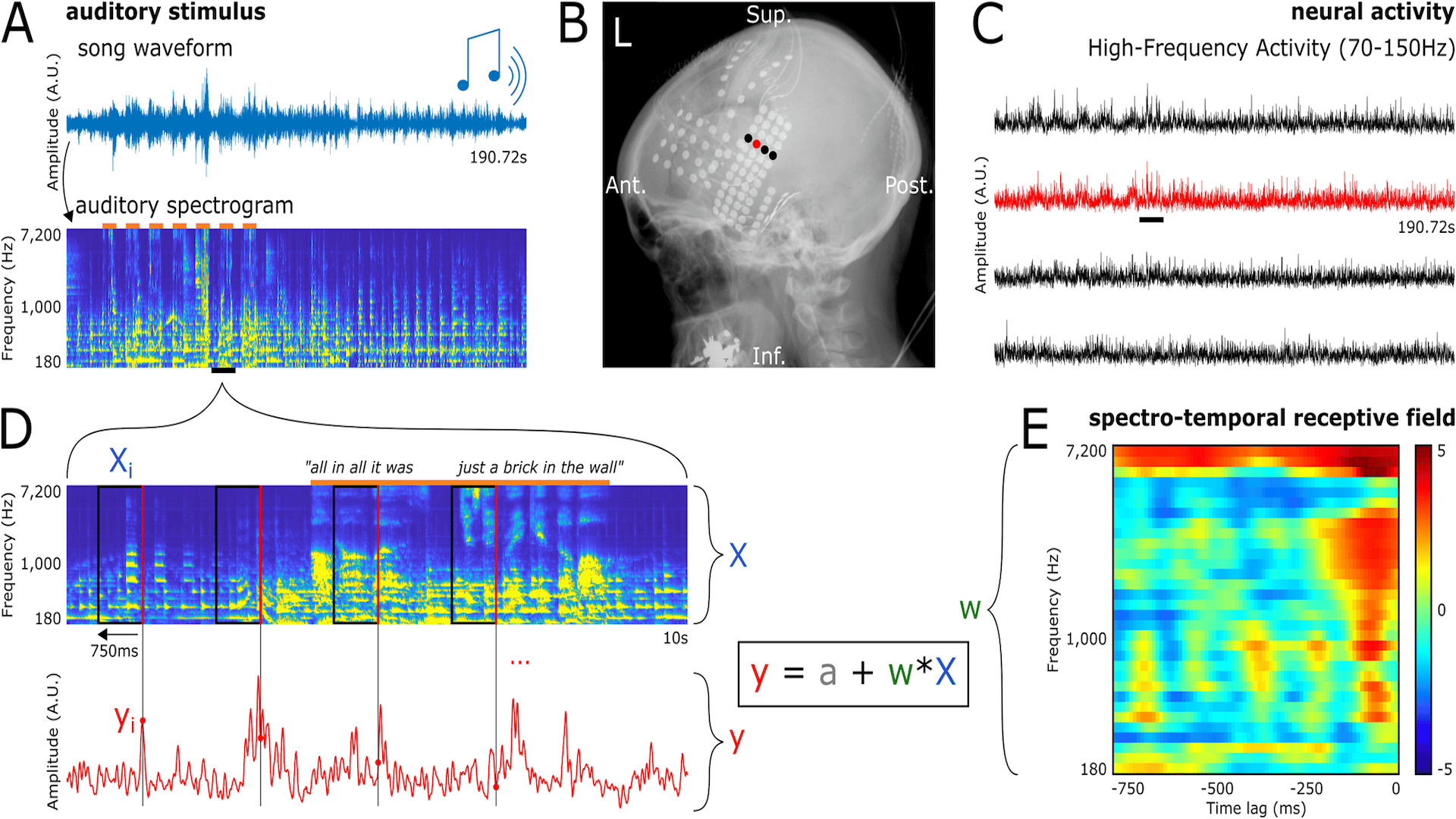

The study analyzed data from 29 people who were already being monitored for epileptic seizures. To measure brain activity, postage-stamp-sized arrays of electrodes were placed directly on the surface of each participant’s brain. As a participant listened to the Pink Floyd song, the electrodes captured the electrical activity of several brain regions attuned to musical elements such as tone, rhythm, harmony, and lyrics.

The researchers then used an AI model to turn that brain activity into sound. The model was trained to decipher data captured from thousands of electrodes and analyze patterns in the brain’s response to various components of the song’s profile. For example, researchers found that some portions of the brain’s audio processing center respond more to a voice or a synthesizer, while other areas prefer sustained hums.

Another AI model then reassembled the composition to estimate the sounds that the patients heard. Once the brain data were fed through this model, the music returned; the lyrics were slightly garbled, but the melody was intact enough to replicate Pink Floyd’s distinctive song.

The song “Another Brick in the Wall, Part 1” was intentionally chosen because of its layered and complex nature. As Ludovic Bellier, the study’s lead author, explained, “[The song] brings in complex chords, different instruments, and diverse rhythms that make it interesting to analyze.” The song was not picked for scientific reasons alone, however. The cognitive neuroscientist added, “The less scientific reason might be that we just really like Pink Floyd.”

Even though this study focused on music, the researchers expect their results to be used to translate brain waves into human speech, particularly to help people who have lost their ability to speak. The AI model could be used to improve brain-computer interfaces, which are assistive devices that record speech-associated brain waves and use algorithms to reconstruct intended messages.

Brain Activity Research

Many studies have attempted to decode what people see, hear, or think from brain activity alone. For example, in 2012, a team successfully reconstructed, for the first time, audio recordings of words that participants with implanted electrodes had heard. Other studies have used similar techniques to reconstruct recently viewed pictures from participants’ brain scans.

In a study published in May 2023, researchers even developed a noninvasive AI system that can translate a person’s brain activity into a stream of text. A semantic decoder was trained to match the participants’ brain activity to meaning by using OpenAI’s large language model GPT. Once the system was trained, the participants were scanned while they listened to a new story or imagined telling a story; the decoder could generate corresponding text from brain activity alone.