The Museum of Modern Art (MoMA) has unveiled Refik Anadol: Unsupervised, a project that marks another breakthrough in AI art. On view through March 5, 2023, the artwork uses a sophisticated artificial intelligence model designed by the artist, Refik Anadol.

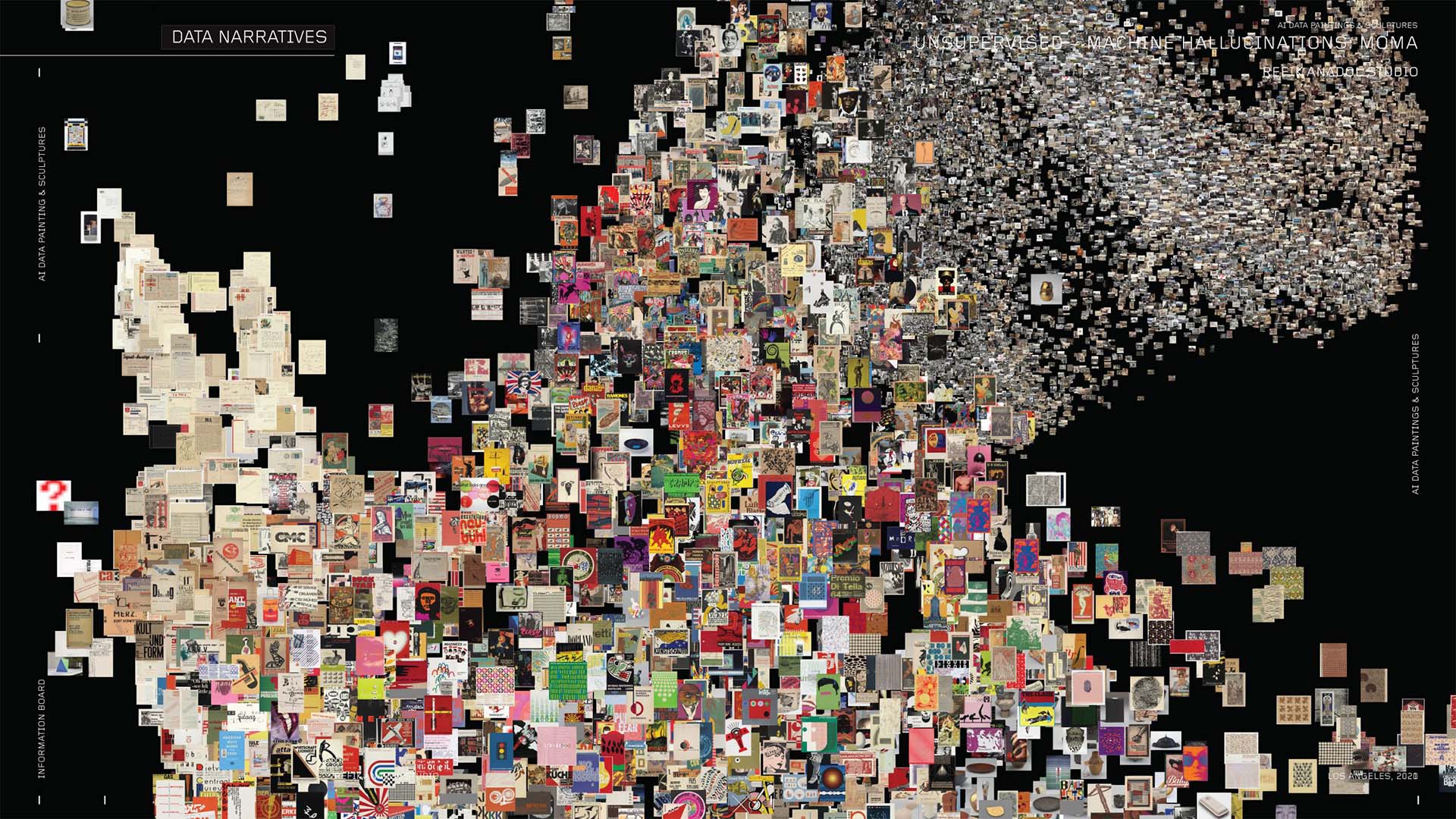

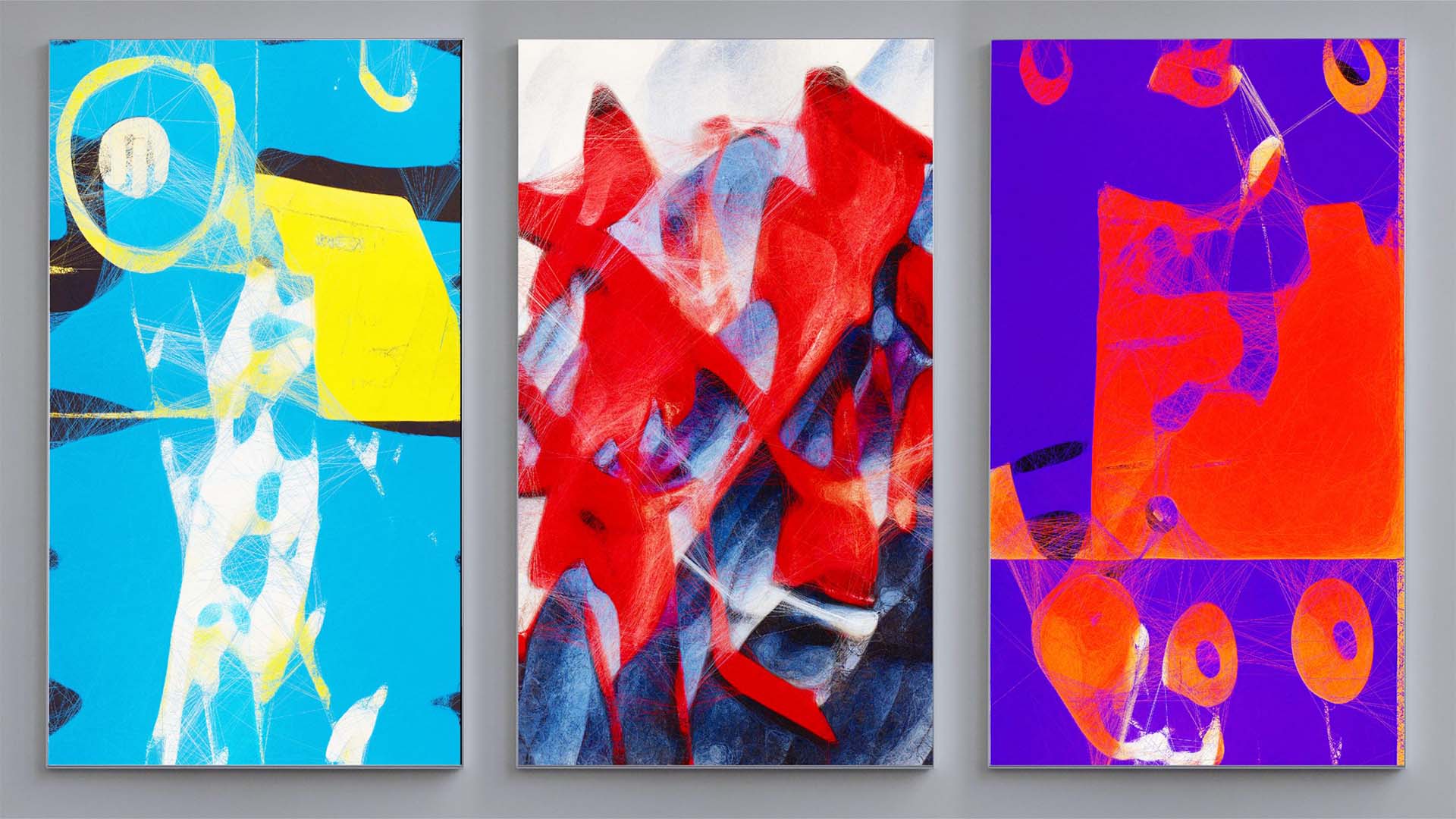

On its website, MoMA opens its description of the exhibition with a question: “What would a machine dream about after seeing the collection of The Museum of Modern Art?” Drawing on 380,000 images of 180,000 art pieces from MoMA’s own collection, the exhibition sets out to answer that question by using artificial intelligence to reinterpret and transform 200 years’ worth of art. Anadol is known for his media works and public installations, and his moving digital artworks unfold in real time, continuously generating new and unique forms.

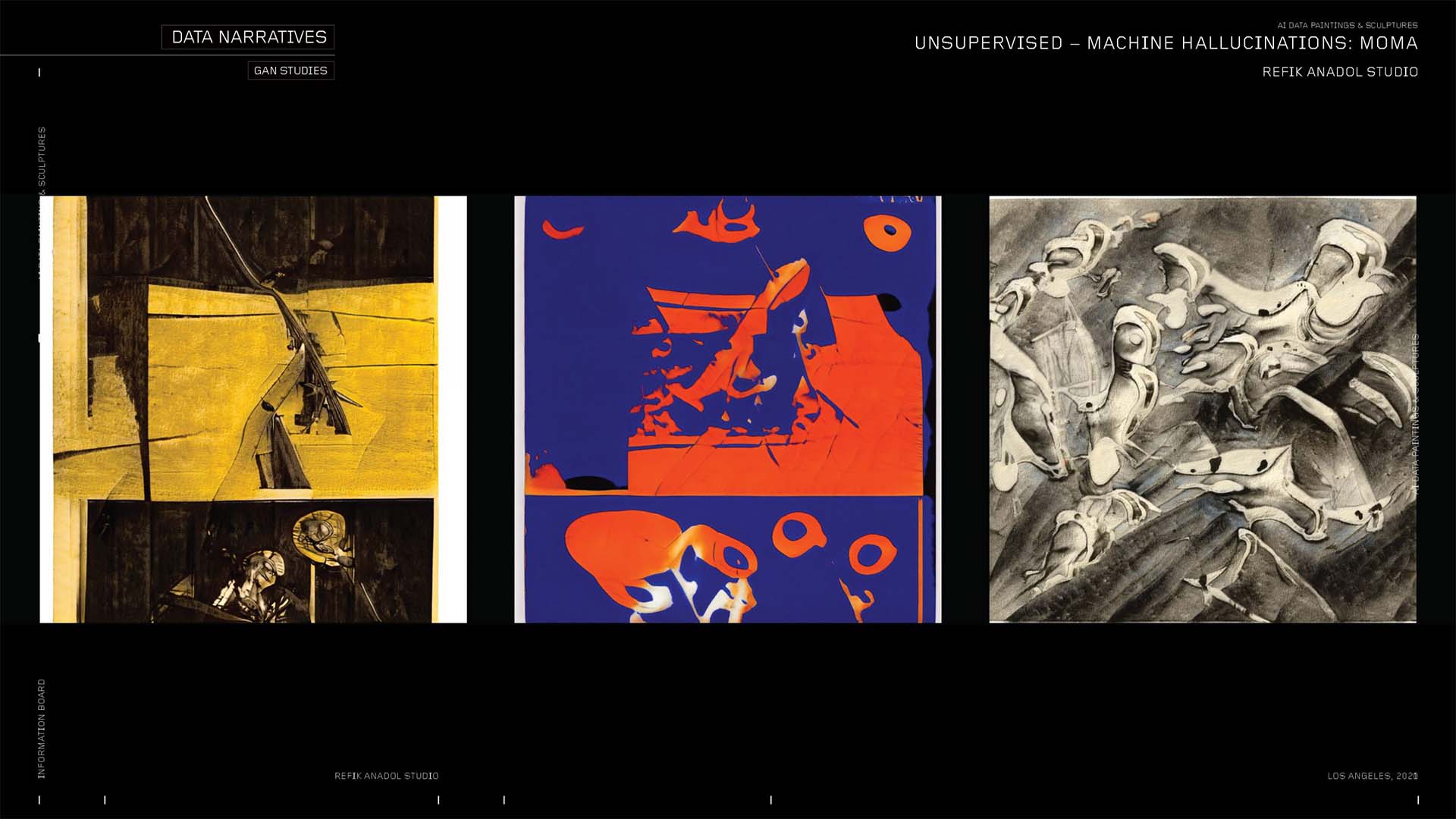

The work was originally created digitally and published in 2021 on the platform Feral File as part of the artist’s Machine Hallucinations series, which began in 2016. That 2021 version used several kinds of generative adversarial network (GAN) algorithms to create the generative artwork from the museum’s “publicly available data.” According to Fast Company, the team behind the AI that powers Machine Hallucinations trained it on an Nvidia DGX Station A100, a desktop-sized box used for “AI Supercomputing.”

Anadol essentially trained a sophisticated machine-learning model to “walk” through its conception of the vast range of artworks in the MoMA collection and reimagine or “dream” of the history of modern art. The artist also incorporated site-specific input from the environment of the Museum’s Gund Lobby, which continuously affects the shifting imagery and sound.
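The article does not say how this “walk” is implemented. One common technique in GAN-based art, offered here only as an assumption about what such a walk could look like, is to move gradually between points in the model’s latent space and render an image at each step. The sketch below shows just the interpolation part; `latent_walk` and the two-number vectors are hypothetical stand-ins, and a real model would use much longer vectors and a trained generator to turn each point into an image.

```python
def lerp(a, b, t):
    """Linearly interpolate between two equal-length vectors (t in [0, 1])."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


def latent_walk(start, end, steps):
    """Return `steps` evenly spaced latent points from start to end, inclusive.

    Hypothetical helper: in a real system each point would be fed to a
    trained generator to produce one frame of the moving image.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(start, end, i / (steps - 1)) for i in range(steps)]


# Toy 2-dimensional latent vectors, chosen only for illustration.
path = latent_walk([0.0, 0.0], [1.0, 2.0], steps=5)
for point in path:
    print(point)
```

Because neighboring points in the latent space tend to produce similar images, stepping along a path like this is one way a generator can yield imagery that morphs smoothly rather than jumping between unrelated pictures.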

MoMA’s senior curator Paola Antonelli said the new exhibit “underscores its support of artists experimenting with new technologies as tools to expand their vocabulary, their impact, and their ability to help society understand and manage change.”

The new exhibition is said to differ from the first online display. According to MoMA, the in-person exhibit also monitors changes in movement, light, ambient volume, and weather, all of which can affect the moving images on display. In the museum’s release, the artist stated, “I am trying to find ways to connect memories with the future… and to make the invisible visible.”

The GAN-based image generation used in this exhibition differs from the diffusion models that power popular AI art generators such as Stable Diffusion and OpenAI’s DALL-E 2. Both approaches start from random noise, but they arrive at an image differently. A diffusion model is trained by progressively adding noise to images and learning to reverse that corruption, so it generates a “realistic” image iteratively, removing noise over many steps. A GAN, by contrast, is an “adversarial” system of two networks: a generator that creates “plausible data,” and a “discriminator” that tries to tell the generator’s output apart from real training images, with each network improving against the other. While text-to-image diffusion tools are typically trained on images paired with captions and steered by text prompts, Anadol’s GAN model is described as generating its own images “unsupervised”.
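To make the “adversarial” idea concrete, here is a minimal sketch of the two competing objectives in a standard GAN. It is not the code behind Unsupervised; the function names are hypothetical, the scores are made-up numbers standing in for a discriminator’s raw outputs, and the generator loss shown is the commonly used “non-saturating” variant.

```python
import math


def sigmoid(x):
    """Map a raw score to a probability that a sample is real."""
    return 1.0 / (1.0 + math.exp(-x))


def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy: real samples should score high, fakes low."""
    real_term = sum(-math.log(sigmoid(s)) for s in real_scores)
    fake_term = sum(-math.log(1.0 - sigmoid(s)) for s in fake_scores)
    return (real_term + fake_term) / (len(real_scores) + len(fake_scores))


def generator_loss(fake_scores):
    """Non-saturating loss: low when the discriminator rates fakes as real."""
    return sum(-math.log(sigmoid(s)) for s in fake_scores) / len(fake_scores)


# Illustrative scores only (assumed values, not measured from any model).
real = [3.0, 2.5]
caught_fakes = [-3.0, -2.0]     # discriminator correctly rejects these
convincing_fakes = [3.0, 2.0]   # discriminator is fooled by these

print("D loss, fakes caught:    ", discriminator_loss(real, caught_fakes))
print("D loss, fakes convincing:", discriminator_loss(real, convincing_fakes))
print("G loss, fakes caught:    ", generator_loss(caught_fakes))
print("G loss, fakes convincing:", generator_loss(convincing_fakes))
```

The two losses pull in opposite directions: the same convincing fakes that lower the generator’s loss raise the discriminator’s. Training alternates between the two networks so that each improves against the other.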

Michelle Kuo, a curator at MoMA who was a leader in organizing the exhibit, said in the release that “Often, AI is used to classify, process, and generate realistic representations of the world. Anadol’s work, by contrast, is visionary. It explores dreams, hallucination, and irrationality, posing an alternate understanding of modern art—and of artmaking itself.”
